From its inception in 1985, Altair has focused on delivering innovative products and services to its customers. The company has not only written robust computer-aided engineering (CAE) software but also built its renowned simulation portfolio, HyperWorks®, through acquisitions and partnerships.

As a result, the company’s solver technology enables users to achieve more efficient structural designs. Its modeling and visualization tools are fast and realistic, empowering engineers to investigate ever more concepts and resolve problems quickly. What’s more, through Altair’s patented, units-based licensing system, engineers can work the way they choose, on platforms ranging from cloud-based supercomputers to hand-held devices.

Modeling and Visualization: Realistic Results

In 1985, the auto industry was reawakening after an economic downturn, and CAE tools were just starting to gain acceptance. Three contract engineers – George Christ, Mark Kistner, and Jim Scapa – formed Altair to help automakers understand and benefit from this new engineering technology.

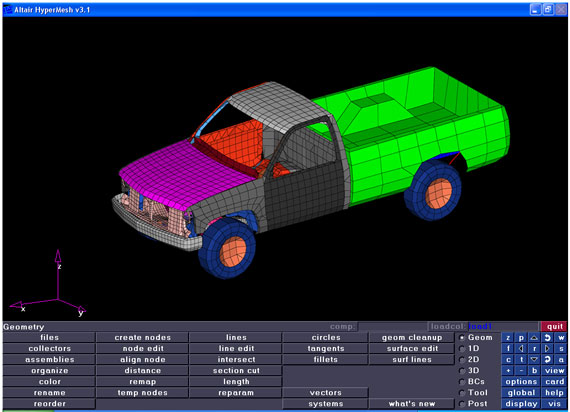

Finite-element models of the 1990s were typically coarse models consisting of a few thousand elements. Altair HyperMesh circa the mid-1990s is shown above.

But it wasn’t long before these engineering consultants and their team realized that the existing CAE solutions were far from ideal, so in 1988 they decided to develop their own software. By 1990, the company had released HyperMesh®, a finite-element pre- and post-processing package for designing and engineering products virtually. It was 20 times faster than competing products.

James Dagg, Altair CTO, User Experience, recalls that in 1987, part of his job at Altair was to write software – in Fortran – that automated the existing tools of the day.

He describes the era: “The state of the art was using very small models, perhaps a few thousand elements. We would study small numbers of components within a subsystem using linear analysis, just to see what the stresses were under a few load cases. You would construct the models by measuring coordinates of points on blueprints and enter those coordinates into the software by typing, then create finite elements pretty much by hand.”

Dagg explains that the results were mostly static. In the early 1990s, though, Unix-based workstation vendors introduced custom 3D graphics that enabled elementary levels of animation and visualization. Because the memory of such machines was measured in megabytes, not gigabytes, a big model would have had fewer than 100,000 elements. Over time, compute power revved up, and graphics programming interfaces such as OpenGL became widespread. The CAE community took advantage of them.

Dagg further explains that with the release of HyperMesh, which took advantage of leading-edge hardware and an innovative user interface, engineers could construct and visualize much larger finite element models far more rapidly. That led to a huge change in the pace and scale of modeling and simulation.

In the new millennium, hardware vendors began developing cards with specialized graphics processing units (GPUs) that excelled at getting graphics up on the screen quickly. Graphics card memory kept getting faster, and the number of computations the cards could perform kept growing. Although this development was driven by the needs of the gaming industry, the cards became increasingly well suited to the technical community. As a result, faster modeling and visualization let engineers explore more concepts and resolve problems more quickly.

According to Dagg, “People expect their software tools to respond immediately when they interact with them. They don’t want anything to interrupt their creative flow. That means that the performance of the software and hardware has to be not only terrific but also that the workflows are intuitive, efficient and responsive.”

Today’s finite-element models contain millions of elements to accurately understand and optimize system-level performance. New HyperMesh user experience (above) coming soon.

In the 1980s and 1990s, the way in which engineers interacted with their models was not very efficient by today’s standards. So Altair has focused on building a better user interface for engineers. “Our imperative,” says Dagg, “is to make the new tools easy to discover, quick to learn, fun to use and highly productive.”

And how has the scope of modeling changed over the decades? According to Dagg, “Simulation not only must handle very large system models, like complete oil rigs as well as full automotive and aircraft models, but also account for the very small details – all the way down to the micro-structural aspects of the materials. Altair software enables users to actually model the fibers and voids within the materials themselves, enabling us to better understand, and address, material failure.”

What’s more, engineers no longer look only at a single subsystem or product. They also examine how products interact with one another and how they are controlled. “Rather than doing isolated studies,” says Dagg, “we’re often dealing with a systems-level view of products in their environments.”

That trend is paving the way for working with a “common model,” rather than separate models created by each engineering discipline, and for multiphysics simulation. “Ultimately,” Dagg explains, “we need to consider all the performance attributes of a design simultaneously to arrive at a truly optimal design that balances all the tradeoffs between safety, performance, cost, manufacturability, etc.”

Dagg and his team are also focused on capturing engineering knowledge and embedding it in their software. He cites the example of the Altair HyperWorks Virtual Wind Tunnel solution, which incorporates sophisticated computational fluid dynamics (CFD) technology behind a very simple user interface that automates the setup of a vehicle in a wind tunnel test chamber for non-experts.

“We’ve spent the last three or four years,” says Dagg, “deeply understanding how wind tunnel staffs work – how they need to set up tests and the factors that influence their solutions. Their knowledge is built on top of the base software. In a typical scenario, a user can park a model of a car into a wind tunnel displayed on the screen and type in the wind speed and a few parameters. The software delivers a solution in a few hours.”

“Our software is driven by engineers,” Dagg concludes. “Altair is an engineering company, which really influences the thinking that goes into our solutions.”

Solvers: Elements of Success

Uwe Schramm, Altair CTO, Solvers and Optimization, recalls that in the 1980s, engineers in some industries were building models consisting of a few thousand elements in their analysis process. Often, every node had to be typed in by hand. Jobs were run on expensive mainframe computers, and it could take days before results were received.

In the 1990s, design at large companies such as the automakers was driven by physical experimentation. According to Schramm, no design decisions were made based on computational simulation. Instead, engineers matched the results of physical tests with simulations to look for failure modes or to improve computational methodology. Out of this practice emerged engineering specialists, or analysts, with expertise in interpreting finite-element modeling (FEM) and finite-element analysis (FEA) results.

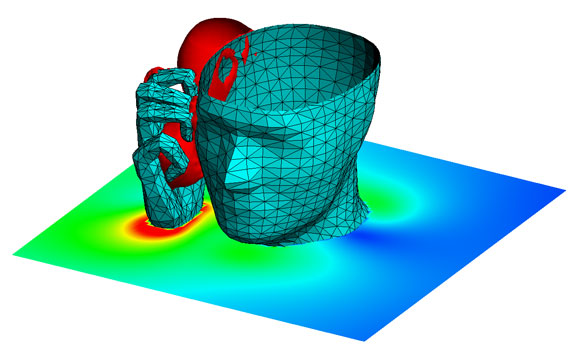

Solvers like Altair’s FEKO for electromagnetic simulation have advanced to solve broad and complex classes of problems.

Solver technology initially ran on mainframe computers, but over time it became commonplace for engineers to have workstations on their desktops. With model-building software such as HyperMesh available, engineers started running simulations on these workstations. Schramm explains that as computing platforms improved, solvers (the mathematical engines) became a lot faster, and engineers began building bigger, more complex models. These complex models have sparked the need for high-performance computing (HPC) platforms, including computing clusters (the successor to mainframe technology).

And as models have grown in size and complexity, software developers have incorporated more physics into the solvers – giving rise to the philosophy that there is not simply a need for multiphysics simulation but rather for multiphysics-driven design and optimization. Today, Altair’s HyperWorks CAE software suite includes multiphysics analysis and optimization capabilities for structures; crash, safety, impact and blast; thermal; fluid dynamics; manufacturing; electromagnetics; and systems simulation.

The 1990s also saw the academic and research communities investigate the concept of structural optimization – and they began leveraging optimization techniques with CAE (as opposed to a manual iterative process) to refine design attributes to meet program objectives. Recognizing this key trend in the industry, Altair started collaborating with the University of Michigan and its ongoing research in a new field of study known as topology optimization.

In simple terms, topology optimization – also referred to as morphogenesis, biomimicry and generative design technology – finds the best material distribution in a design space for given loads and boundary conditions. Altair released the first commercial version of its topology optimization software, OptiStruct®, in 1993 and won Technology of the Year from IndustryWeek magazine a year later for its trailblazing optimization software.

From the beginning, the developers of OptiStruct worked hard on usability and functionality to encourage adoption. One area on which they concentrated was incorporating manufacturing constraints into the process. Schramm explains that this focus holds true today: the biggest contextual drivers for the development of OptiStruct include additive manufacturing (AM), also known as 3D printing, and the introduction of new materials, including composites.

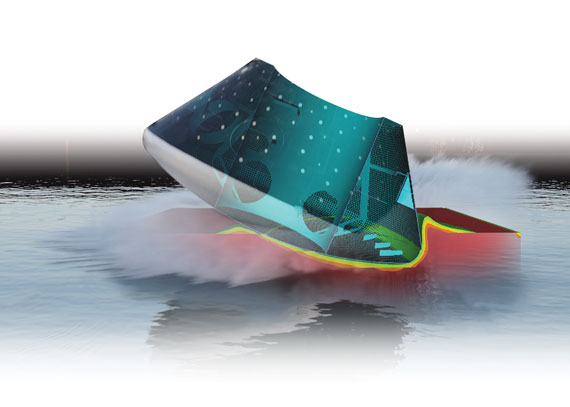

Fluid-Structure Interaction (FSI) with Altair RADIOSS makes it possible to simulate the behavior of fluids (gas or liquid) and structures simultaneously.

AM can actually produce the designs that are conceived via material layout optimization with little to no rework. Some processes even allow multiple materials in a single part. The 3D printing process complements OptiStruct in achieving the most efficient structural designs.

Schramm notes that composites allow designs to be highly customized for their specific use cases and loading conditions. Computer simulation and optimization work hand in hand to evaluate a virtually limitless number of variants, taking into account laminate ply shape, position, orientation, material and lay-up sequence to arrive at the best possible solution to meet performance, weight and cost objectives.

Schramm says, “What affects our technology development is that every product we are creating, like a computer or a car, is really a system. That is why we are looking at system-level design…we have to simulate everything that there is. What brings things together is the holistic view of the design.”

According to Schramm, future simulation software will have even more refined modeling capabilities and enable users to perform more simulations and develop better products. What’s more, as compute resources become more powerful, the software will take advantage of this, further reducing the time it takes to complete complex modeling.

Cloud Computing: A Paradigm Shift

Thirty years ago, processing power was pretty much in its infancy. Supercomputers, the machines with the top processing muscle, were leased largely by government, research institutions and academia. They were simply too expensive for private companies to own, operate and maintain.

At the end of the 20th century, some original equipment manufacturers, like the large automakers, started to leverage these HPC platforms (supercomputers as well as “mini-supers”) for their complex jobs. These shared HPC resources enabled engineers to perform more virtual simulations and validations of their designs to reduce time to market and improve product quality, but typically at high cost.

The new millennium has presented a computing landscape that offers engineers more hardware options than ever before, ranging from HPC systems on the high end to mobile devices on the low end. Sam Mahalingam, Altair CTO, HPC/Cloud, explains that as processing power, memory and networking have become more capable and more affordable, and as the Internet has become ubiquitous, Altair can offer engineers another option to consider: working in the “cloud.”

Mahalingam explains that two primary trends are driving the cloud market and making HPC platforms more affordable to users. The first is scalability: devoting massive resources that work in tandem to solve extremely complex jobs. The second is the emergence of the “public cloud,” which brings together software as a service (SaaS), platform as a service (PaaS) and infrastructure as a service (IaaS) to minimize capital expenses by shifting spending to variable, pay-as-you-use costs. (In contrast, “private clouds” typically are set up within companies behind their own firewalls, where resources are finite.)

Companies that adopt public cloud solutions for product development must work through a myriad of technological issues, as well as perceptions that the cloud lacks security. In addition, companies have to assess how much money they want to spend on problem-solving. In the public cloud, hardware resources are viewed as effectively infinite, but software resources are not necessarily so.

Mahalingam says, “It is generally the software licenses and the amount of money a company spends on the software that become challenges. A company can say, ‘I can get my response back in five minutes, but I need to use 20,000 nodes.’ How many licenses of those programs do you need on those 20,000 nodes?”

That’s why Altair has leveraged its existing products and services to provide private and public cloud options to customers. According to Mahalingam, Altair’s extensive application portfolio and rich HPC workload management solutions can help large, mid-sized and small customers capitalize on private or public cloud computing strategies.

For instance, HyperWorks Unlimited Physical Appliance is a private cloud solution that offers fully configured hardware and software, as well as unlimited use of all Altair software within the appliance, an easy-to-implement device. Leased to customers, it shifts HPC investments from capital to operational expenses. This turnkey system comes loaded with Altair’s applications and HPC tools for simplified deployment. Its open architecture also allows third-party solvers to be fully integrated, for a monthly fee, on a bring-your-own-license (BYOL) model.

Mahalingam explains that HyperWorks Unlimited – Virtual (HWUL-V) for Amazon Web Services is a public cloud solution that brings SaaS, PaaS and IaaS to users within a single portal. It integrates unlimited use of the HyperWorks CAE suite with PBS Professional®, Altair’s HPC workload manager, as well as application-aware portals for HPC access and remote visualization of big data.

“Where the Virtual Appliance really shines,” says Mahalingam, “is if you don’t have a server infrastructure on premise. Companies can start using the public cloud infrastructure straight away. All they need is a laptop and a browser to start simulating their designs.” Companies with servers on their premises can tap the virtual appliance whenever peak loads exhaust their internal computing resources, or they can deploy the HWUL virtual appliance on their own server network to operate in a private cloud setting.

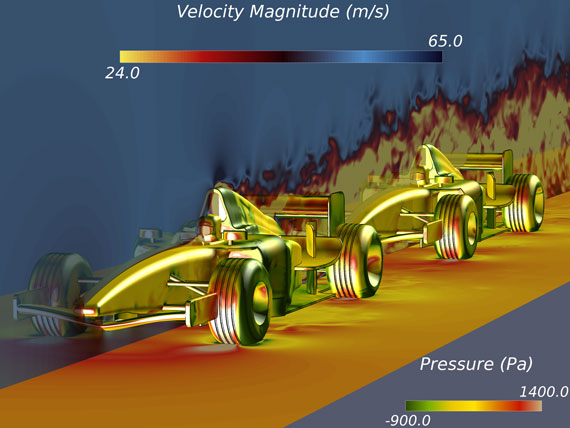

Cloud CAE appliances will drive large-scale simulation and multiphysics optimization. Shown above is a 1 billion-element CFD simulation solved with Altair AcuSolve.

What makes Altair’s HPC cloud solutions innovative is the simplicity of their designs and the tie-in to Altair’s software licensing model. According to Mahalingam, “By combining hardware and software into an appliance concept, small, medium and large companies have instantaneous access to exploring their designs – and quickly bringing quality products to market.”

The Next 30 Years: Continuing to Push Boundaries

Altair Chairman and CEO Jim Scapa began his career in the late 1970s in the Detroit auto industry and remembers performing testing and simulation on automotive brakes using home-grown codes. When he and his colleagues formed Altair, Unix workstations and PCs were just starting to be adopted in engineering applications.

According to Scapa, “When we introduced HyperMesh, the software was innovative because it took advantage of new computing architecture. We brought the models into memory, where graphics manipulation was fast. And when we ported HyperMesh to Unix workstations, the software performed 20 times faster.”

He attributes part of the company’s success to taking advantage of technological trends, such as advances in computing infrastructure. “We have been at the leading edge of embracing new technology and introducing innovation,” says Scapa.

In addition, Altair stays on the leading edge by seeking new technology through partnerships, acquisitions and the commercialization of research work, as in the case of OptiStruct. While the market initially did not see a place in industry for topology optimization, today it is a mainstream technology, and more relevant than ever as it complements 3D printing and its ability to make organic shapes and structures.

Scapa explains that the company often spends years investigating leading-edge technologies before bringing them to market: “We are very experienced and ready to create products and offerings on the business and technology sides that are well thought out and fit well (in business) because we have so many years of thought behind the offerings.” He cites Altair’s solutions in data analytics and cloud computing as examples.

Looking forward to the next decade and beyond, Scapa sees transformational changes on several fronts. “We see true simulation-driven design at the heart of the product development process,” he says. “It will liberate human creativity and provide insights interactively. CAD will be a minor piece in the whole puzzle.” He explains that whereas CAD tools often document the designs today, they will serve as the “spell checkers” of simulation tools in the future.

An emerging trend that will prove key to software development in the enterprise space, particularly in the areas of compute, process and data management, revolves around open source tools and technology. According to Scapa, the open source community will be able to accelerate technology development in the enterprise space much faster than companies pursuing proprietary approaches. As such, Altair has announced that it is opening up its PBS Professional code to the open source community (see page 22, Altair to Open Source PBS Professional HPC Technology).

The open source community also will play a role in furthering the development of machine learning and analytics. As far as tools go, libraries of machine learning algorithms are more likely to come out of the open source community, whereas simulation applications, and the tools used to build them, will remain largely proprietary.

Scapa also notes that the user experience will become more intuitive and provide easier workflows in the future: “The ultimate goal is (for users) not to even notice the ergonomics of using the tools. The ergonomics will be completely unobtrusive, just like driving a car.”

After 30 years in the business, Scapa says he is just getting started. “I’m still passionate about what I am doing. We are having fun, impacting the world and the market.” And simulation users would not have it any other way.

Beverly A. Beckert is Editorial Director of Concept To Reality magazine.